AGI—Complex, Not Complicated

True Agentic AI? When? I haven’t seen a single AI benchmark that steps into this messy, wicked, unpredictable arena.

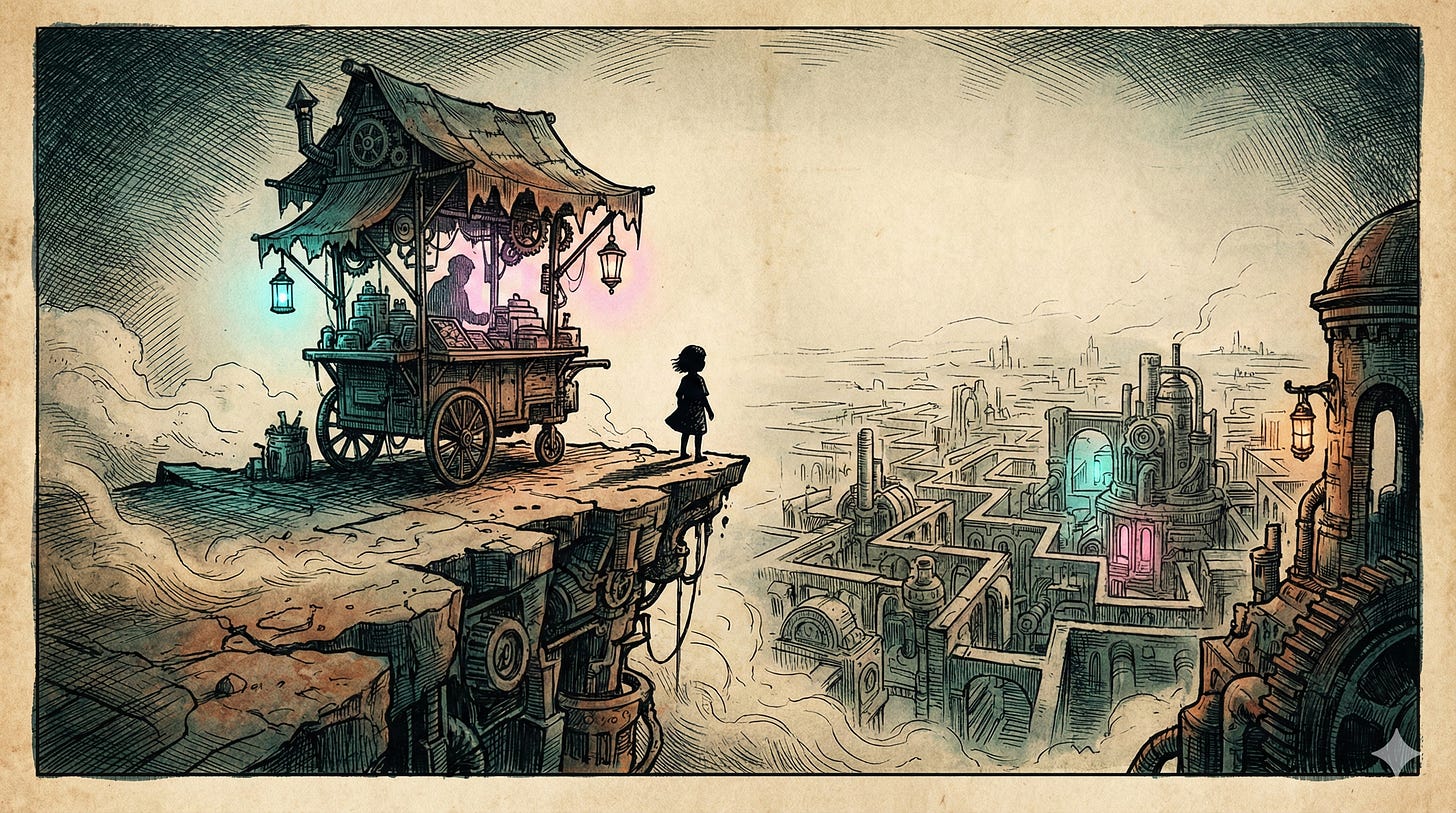

We’ll have AGI when a machine can run a snack shop.

Every year on King’s Day in the Netherlands, the streets transform into one giant flea market. Citizens of all ages spread out their plastic sheets and foil, surround themselves with attic junk and unwanted birthday gifts, and try to pawn them off on curious passersby. It’s the nation’s favorite pastime: everyone loves snooping around to see what kind of crap their neighbors are desperate to unload this year. The best part is that people try to resell the same junk they bought the year before, but at a higher price.

This annual ritual isn’t complete without tens of thousands of kids hawking self-baked cookies, orange juice, or whatever else they managed to swipe from their parents' kitchens. It’s chaotic fun for everyone involved—and here’s a significant clue—anyone can do it. You don’t need a PhD to figure out what might sell, how to display it, what to charge, or how to stop some opportunist from running off with your goods or cash.

But I don’t expect an AI to be a street vendor anytime soon.

What AI Benchmarks Actually Measure

If you believe the AI hype machine, the arrival of AGI (Artificial General Intelligence) is just around the corner. The so-called “evidence” is a non-stop barrage of near-incomprehensible benchmarks showing AIs flexing their reasoning muscles to solve one intricate problem after another. Whether it’s mind-bending puzzles or math equations that make your head spin, ChatGPT, Claude, DeepSeek—they’re all getting better and faster. The conclusion seems obvious, right? AIs are outsmarting humans. Ergo, AGI is nearly here.

But not so fast.

When you dig into these benchmarks, a pattern emerges: the problems AIs are solving are complicated, sure, but they’re also static. These challenges don’t evolve, they don’t shift under pressure, and they certainly don’t throw curveballs the way real life does. There’s no time element that transforms them into complex or chaotic systems. In the Wicked Framework, these challenges aren’t wicked—they’re tame problems, no matter how tricky they look on paper.

Contrast that with the humble chaos of making a few euros on King’s Day: Should you sell cakes or cookies? How much will people pay? How many people will even show up? Do you have enough stock? Where do you stash the money? What if someone steals your stuff? How will you handle the toilet breaks? What if someone wants to haggle or buy out your entire stash at a steep discount?

This isn’t some static puzzle—this is a simple, complex problem. And it’s the exact opposite of the tidy, complicated problems AIs are currently being tested on.

What Real AGI Would Look Like

Here’s a definition of AGI:

“Artificial General Intelligence (AGI) refers to hypothetical AI systems that can match or exceed human-level performance across virtually any cognitive task, demonstrating general problem-solving abilities comparable to human intelligence. This includes learning, reasoning, and adapting to new situations without task-specific training.” (Claude)

To me, “virtually any cognitive task” and “adapting to new situations” include simple, complex problems, not just static, complicated ones. Yet I haven’t seen a single AI benchmark that steps into this messy, wicked, unpredictable arena. Why? Because wicked problems are unsolvable. They have no optimal solution.

We won’t achieve AGI just because a machine can solve the world’s most complicated puzzles or crack math problems no human can understand. We’ll have AGI when a machine can run a snack shop, manage a vending cart, or hustle a few bucks on King’s Day. We’ll have AGI when that machine figures out how to protect its goods while hopping over to a charging station mid-afternoon. We’ll have AGI when it can end that day with a modest profit. Because that’s something literally any human, six years and up, can do. Come over to the Netherlands on April 27th, and you’ll see.

No, I don’t expect to see any AGIs in the streets on King’s Day this year.

But I’ll be here, waiting for AGI.

Jurgen, Solo Chief

P.S. Think AGI will arrive via benchmarks? Tell me why I'm wrong in the comments.

P.P.S. If anyone is wondering, we’ll hit artificial superintelligence (ASI) when an AI can run that snack shop or vending cart on less than 20 watts, because that’s roughly how little power the human brain draws throughout the day.

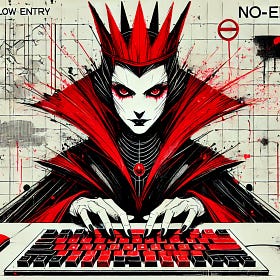

The Red Queen Says No to AI Agents

The future of work isn’t just hype or doom. It’s about human-robot-agent collaboration—and those who master that will thrive. I’ll be your guide and skeptical optimist in a rapidly changing world. Subscribe now and get ahead.

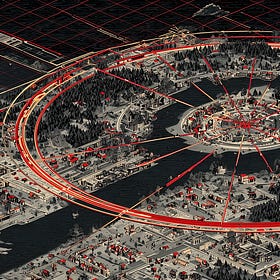

The Agentic Organization

Humans are the bottleneck. Agentic organizations separate AI workflows from human processes, like ring roads that bypass city centers. Dual-lane processes should prevent intellectual traffic jams.

The Four Tensions of Sociotechnical Systems

Every team faces the same fundamental question: How do you balance freedom with coordination, spontaneity with discipline, performance with agility, and self-actualization with shared purpose?

I'll call it AGI when it moves from "Yes, there is a solution to your weird complex problem, this is so doable, you will love this extra fresh solution!" to "Here is the realistic, achievable way to solve this issue you mentioned, and I can implement it if you approve these steps:...". Right now, after the first, always comes this instead: "In fact I am unable to provide a way to resolve this issue. I miscalculated. I was wishful thinking. I apologize for sounding too confident when I didn't have a solution".

Thank the Lord for Amazon's return policies. The number of times I've had to return audio adapters just because an AI swore this one would do the job is embarrassing.