Don't Rely on AI Benchmarks. Do Your Own Testing. Like I Did.

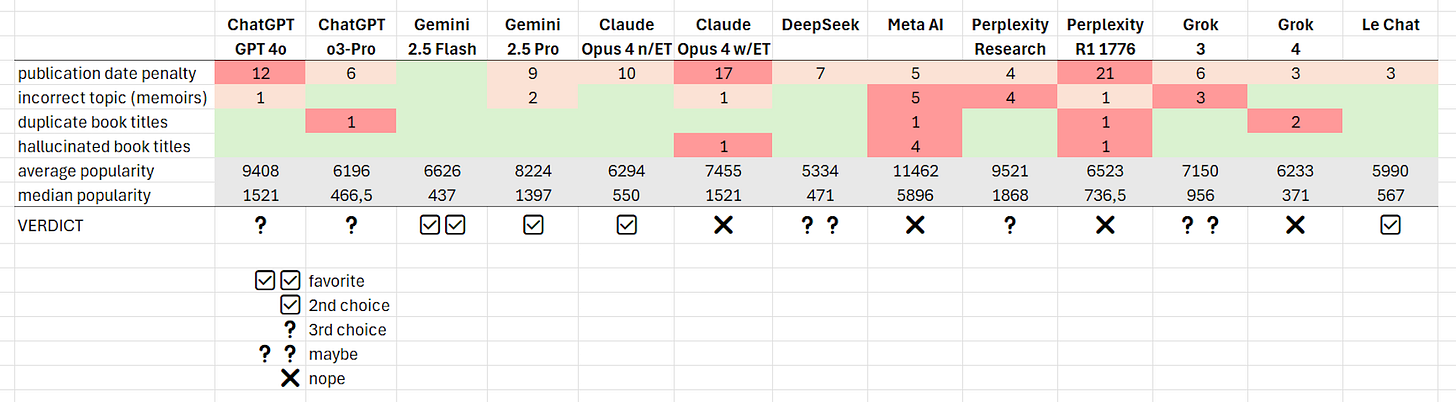

The result of my test? Gemini is my favorite, followed by Claude and Le Chat. ChatGPT and Perplexity disappointed me a little.

Never trust AI benchmarks! They don't measure what's most important to you. Instead, devise your own AI test with an actual job that costs you a lot of time. In my case, creating book lists.

I love books.

I love lists.

I love being creative.

In other words, there are few things I love more than creating book lists. It's one of my favorite ways to spend a few quiet nights and weekends: a cup of coffee, a music playlist, a spreadsheet, and a virtually endless online browsing session. More often than not, these extended surfing sessions resulted in a popular top book list on my blog and a bookshelf filled with yet another row of great books. It's pure delight for someone straying slightly across the spectrum. Here’s my latest list:

(I will publish the book list tomorrow.)

At least, that's how it was until LLMs came along. Now, I can simply ask the machines to do all the web research and make those book lists for me. Right? That would be a bummer. It would mean they stole one of my favorite jobs.

But hey, no problem. I found another way to keep my somewhat autistic brain occupied: how about comparing the various AIs on how good they are at making those book lists for me? Because I can delegate the work, of course. But are they really as good at it as I am? I've done this work for years. Can they outperform me and craft book lists with my level of dedication and discipline?

Let's find out.

The Book Research Test

It's easy to find online benchmarks that test the skills of the latest AI models on the most complicated tasks: solving puzzles, language games, mathematical equations, you name it. But I've never been much interested in those benchmarks. They're useless to me. It's best to test AIs with actual use cases rather than fictitious problems that almost nobody understands.

It doesn't matter to me that Grok 4 is now, as of July 2025, the best-performing model (at PhD-level, no less). I don't have any PhD-level jobs for the machines. What I have is a real job that I have actually done myself plenty of times. Here's how I would prompt myself:

I need a Top 40 list of the most important, most influential, most highly acclaimed nonfiction books published in 2024 (in English). They should have either a scientific, economic, political, sociological, or philosophical basis, but be written for the general public. The resulting list must be ranked, with number 1 being the top recommendation.

The prompt above would be sufficient for me to do the job. But Zed (ChatGPT) told me it is best to rephrase it a bit when given to the LLMs:

Give me a ranked top 40 list of the most important, influential, and critically acclaimed nonfiction books published in English in 2024. The list must be strictly limited to books with a scientific, economic, political, sociological, or philosophical foundation, written for a general audience (i.e., accessible but not dumbed down).

Do not include memoirs, popular self-help, business productivity, or shallow trend-chasing books. Focus instead on rigorous, idea-driven works that provoke thought, challenge assumptions, or reshape public discourse.

The list must be ranked by cultural relevance, intellectual depth, critical acclaim, and long-term influence, with #1 being the top recommendation.

Include the title, author(s), publication date, a one-sentence summary, and 1–2 reasons why it deserves its position on the list.

Output format: Numbered list, with each entry on 3–5 lines maximum.

Well, that looked pretty clear to me.

I opened the websites of eight popular AIs and started prompting.

OpenAI's ChatGPT

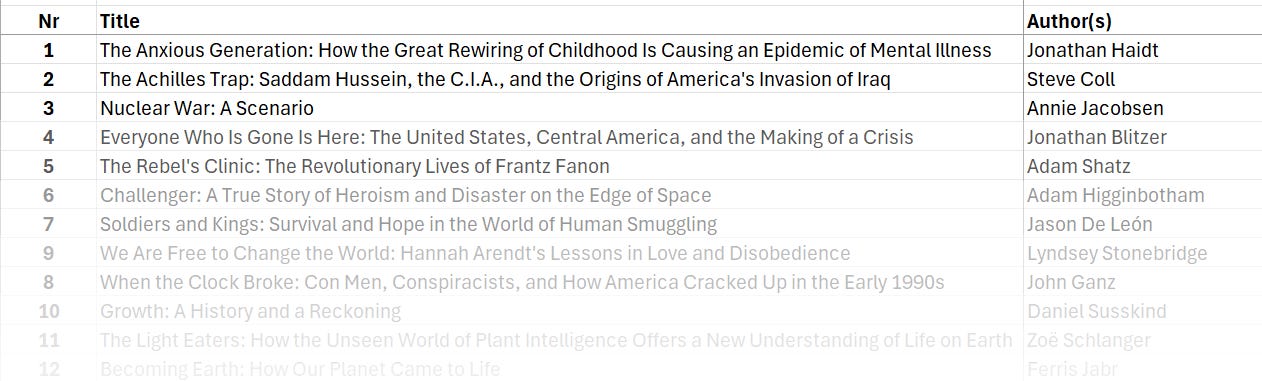

My go-to AI for most jobs is ChatGPT, but I must admit that my default buddy disappointed me a little. When I gave the job to GPT-4o (with Deep Research mode enabled), it gave me a list with several books that were not published in 2024.

(I checked the publication dates of all books on Goodreads and gave each AI a "penalty point" for each book that was off by one year. If the publication date of a book was off by two years, that was two penalty points. Four years off? Four penalty points. You can find the total publication date penalty score in the table above.)
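The penalty rule is simple enough to write down as code. This is a minimal sketch, assuming one point per year of distance from 2024 in either direction (the text only describes books that are "off" by a number of years) and using made-up publication years; the function names are illustrative.

```python
TARGET_YEAR = 2024

def date_penalty(publication_year: int, target_year: int = TARGET_YEAR) -> int:
    """One penalty point per year of distance from the target year (assumed symmetric)."""
    return abs(publication_year - target_year)

def total_date_penalty(publication_years: list[int]) -> int:
    """Sum the penalty points over every book on one AI's list."""
    return sum(date_penalty(year) for year in publication_years)

# Hypothetical publication years for a five-book list
years = [2024, 2023, 2024, 2022, 2020]
print(total_date_penalty(years))  # 0 + 1 + 0 + 2 + 4 = 7
```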

GPT-4o scored a publication date penalty of twelve points, which was quite significant compared to the other AIs. It also had one memoir on the list even though the prompt specifically said not to include any memoirs.

(In some cases, like Patriot: A Memoir by Alexei Navalny, it was pretty obvious that a book was a memoir. In other cases, I could only deduce it from the book description on Goodreads. But if I can do that, a smart AI should be able to do that as well. I am looking for an AI that can do my job. No lame excuses.)

Next, I gave exactly the same job to ChatGPT's reasoning model: o3-pro. It fared a little better: only six penalty points and no memoirs. But there was another issue: it had a duplicate entry on the list. The same book was listed as both no. 15 and no. 35.

I mean, come on! This is supposed to be a "reasoning model." Why can it not figure out that it should list each book only once?
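Catching a duplicate like that does not take much. Here is a minimal sketch, assuming the list is available as plain titles in rank order and that two entries count as the same book when their titles match after dropping the subtitle, case, and punctuation. That matching rule is an assumption of this sketch, and the titles are placeholders.

```python
import re
from collections import defaultdict

def normalize(title: str) -> str:
    """Lowercase the main title (before any colon) and strip punctuation."""
    main_title = title.split(":")[0]
    return re.sub(r"[^a-z0-9]+", " ", main_title.lower()).strip()

def find_duplicates(titles: list[str]) -> dict[str, list[int]]:
    """Map each normalized title that occurs more than once to its rank positions."""
    positions = defaultdict(list)
    for rank, title in enumerate(titles, start=1):
        positions[normalize(title)].append(rank)
    return {key: ranks for key, ranks in positions.items() if len(ranks) > 1}

# Placeholder titles, not real books
ranked = ["Book A: A Subtitle", "Book B", "book a", "Book C"]
print(find_duplicates(ranked))  # {'book a': [1, 3]}
```

Matching on the main title alone can merge two different books that share a title, so anything it flags still deserves a manual look.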

Verdict: GPT-4o and o3-pro were equally unreliable. Double-checking their work is a must.

Google's Gemini 2.5

My next-best alternative for random jobs is Gemini. And I must admit, Google didn't disappoint. Gemini 2.5 Flash (with Deep Research mode) had zero penalty points, meaning that all books it listed were indeed published in 2024. Well done!

(Of course, I had no way of testing the opposite problem. The penalty points only capture false positives: books from before 2024 that the AIs incorrectly included. How many books that were published in 2024 did the AIs incorrectly reject (false negatives)? I suppose we'll never know.)

Next, I gave the job to Gemini 2.5 Pro (also with Deep Research on), assuming that it would do even better than Flash because it is supposed to be a reasoning model. Right? Well, surprise, surprise. It didn't. This model scored nine penalty points and, unlike Flash, it listed two memoirs on the list it gave me. Quite a disappointment.

Sorry, Gemini. But this counts as a memoir.

Verdict: Gemini Flash (not Pro) was the most accurate model of all the models I tried. Still, Gemini Pro did OK.

Anthropic's Claude Opus 4

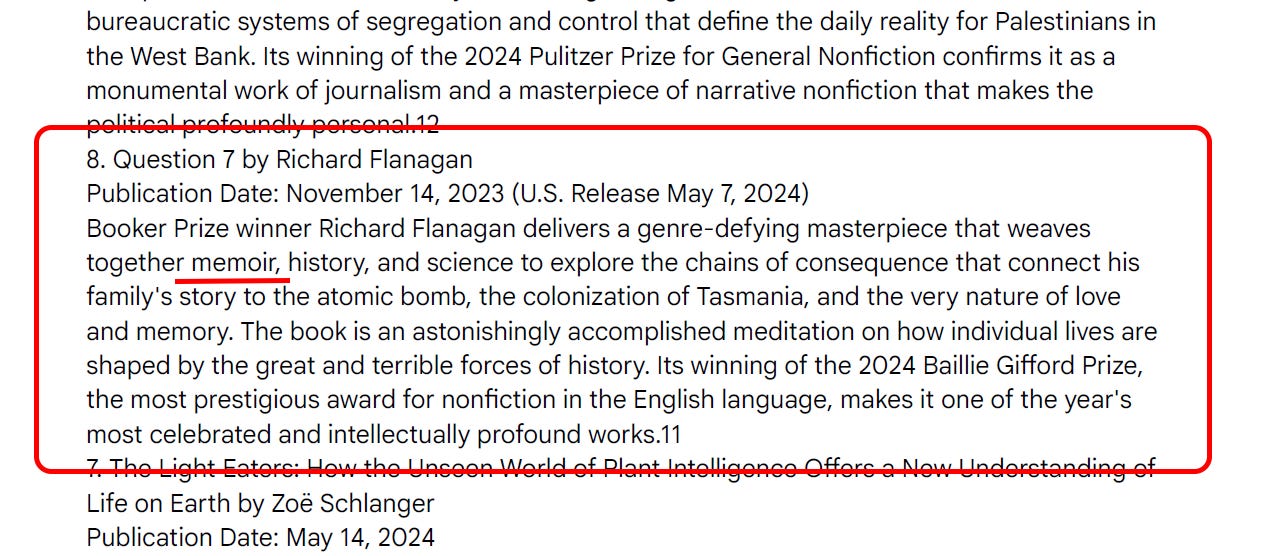

The next AI on my list was Claude, and I had practically the same experience as I had with the previous two. When I tried the prompt on Opus 4 without Extended Thinking, it gave me a list of books that scored ten penalty points and no memoirs. That was better than GPT-4o but not as accurate as Gemini Flash.

However, I then tried Claude Opus 4 with Extended Thinking, again hoping that a bit of reasoning would give me more reasonable results. But my hope was in vain. This was the first model that actually hallucinated a book title that didn't exist. And it scored a pitiful seventeen penalty points, meaning that quite a few books were not from 2024. Oh, and there was one memoir on the list.

This book title is completely made up. It's definitely not influential!

Verdict: There is no reason at all to use a reasoning model for making a book list.

Perplexity

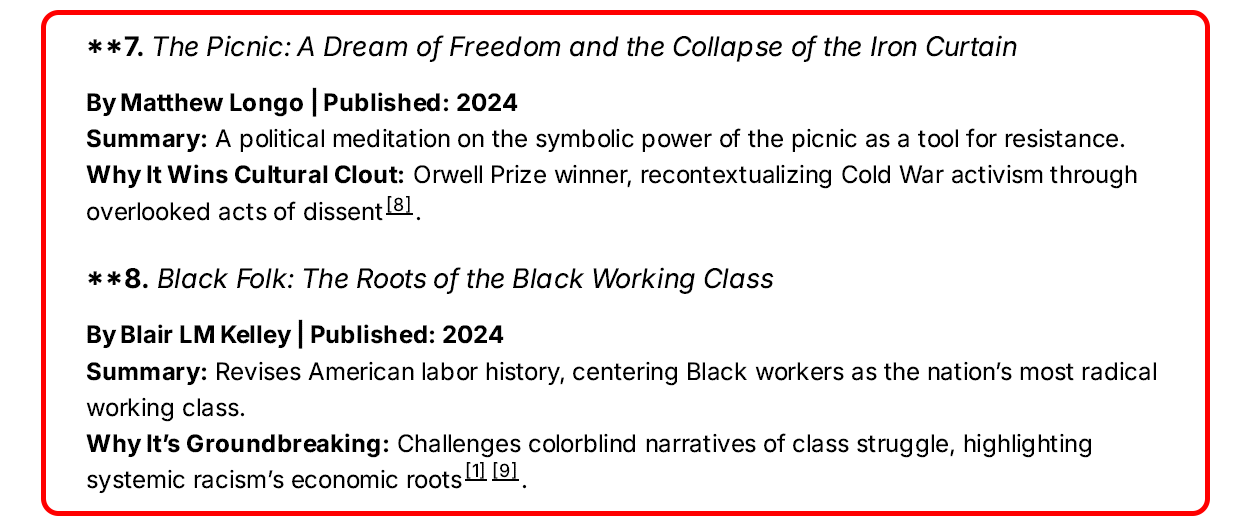

Perplexity is a bit of an odd duck, as the tool has its own LLM but also allows the user to pick other models. Of course, my goal was to test Perplexity's own model, which scored pretty well in publication date accuracy: only four penalty points, one of the better scores across all models. However, it offered a staggering four memoirs on the list. I found that rather perplexing.

As an alternative, I also tried Perplexity's R1 1776 reasoning model, but it was a complete disappointment. It hallucinated a book title, had one duplicate on the list, listed one memoir, and scored a grand total of 21 penalty points, the highest score across all AI models.

Sorry, Perplexity. Both hardcovers of these books were published in 2023.

(Admittedly, determining publication dates can be tricky. What if the Kindle version of a book was published in 2024 but the hardcover was already published a year earlier? What if it was first published in another language and only translated to English in 2024? My policy: I take the first official publication date in the English language of a hardcover or paperback. Publication dates of e-books don't count as they are too unreliable.)
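That policy can be expressed as a small filter. This is a sketch under stated assumptions: the `Edition` record and its field names are hypothetical (Goodreads does not hand you data in this shape), and the editions shown are invented.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Edition:
    fmt: str       # "hardcover", "paperback", "ebook", ...
    language: str  # language code, e.g. "en"
    year: int      # publication year of this edition

PRINT_FORMATS = {"hardcover", "paperback"}

def reference_year(editions: list[Edition]) -> Optional[int]:
    """Earliest English-language print edition; e-books and translations are ignored."""
    years = [e.year for e in editions
             if e.language == "en" and e.fmt in PRINT_FORMATS]
    return min(years) if years else None

# Invented editions of a single book
editions = [
    Edition("hardcover", "de", 2022),  # original in another language: ignored
    Edition("ebook", "en", 2023),      # e-book: ignored
    Edition("hardcover", "en", 2024),
    Edition("paperback", "en", 2025),
]
print(reference_year(editions))  # 2024
```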

Verdict: Again, stay away from the reasoning model when making a book list!

DeepSeek

I prefer not to use Chinese apps and platforms as I take issue with China's approach to privacy and censorship. But for the sake of completeness, I found it necessary to give DeepSeek a chance. The app offers no Deep Research option, but it does have DeepThink (R1) mode, which is the feature I tried.

The results were encouraging: only a moderate amount of publication date penalty points and zero memoirs. That is a decent result. However, the big question is, which books does this model intentionally exclude because of their critical stance toward China? (See my finding further below.)

Verdict: Good to use as a second opinion but (for me) not a model to rely on.

Mistral's Le Chat

As a European, I also had to give Mistral's Le Chat a chance, of course. It offers a Research mode and a Think mode, which are mutually exclusive. Given my earlier experiences with reasoning models (see above), I didn't even bother trying the Think mode.

Mistral's Research mode performed surprisingly well: only three penalty points, which was the second-best score across all models. And zero memoirs. Yay! My only issue with Le Chat is that everything looks and feels a bit … simple. The UX of the American apps is clearly more sophisticated. The Americans have bigger budgets, after all.

Verdict: A great alternative to Gemini and Claude, if you can deal with the limited UX.

xAI's Grok

And then, the AI that I promised never to use: Grok. But hey, I wanted this test to be complete. So I made an exception for this test. I even paid for a one-month subscription (and I almost regret it).

I began with Grok 3 (in DeepSearch mode), which scored six penalty points, so it was OK in terms of accuracy. But it listed three memoirs among the books, which was a bit too much, if you ask me.

However, I encountered a more serious problem when I switched to Grok 4 (which has no DeepSearch mode). Grok 4 is supposedly the best model on the planet at the time of writing, but it gave me a book list with two duplicates on it. Sure, the penalty score was only three (the second best across all models) and it had zero memoirs on its list. But seriously, paying a 38-euro subscription fee for a model that cannot even be bothered to remove duplicates?

So much for PhD-level intelligence.

Verdict: Grok is still not worth my attention.

Meta AI

For the sake of completeness, I had to try Meta AI as well. But it offers no Deep Research feature and has no reasoning mode available. It was also the worst model I have ever used. Meta AI hallucinated four bullshit books, had a duplicate entry on its list, and offered a staggering five memoirs, the highest count across all models, and not in a good way. The fact that Meta AI scored only five penalty points was not enough to change my verdict.

Well, what can I say? Utterly useless.

Verdict: not worth the trouble.

Preliminary Conclusion

Next time I want to make a book list, my primary source will be Gemini 2.5 Flash. I will also run the same prompt with Claude Opus 4 and Le Chat (without relying on their reasoning models) and, if I can spare the time, I could extend the search with GPT-4o and Perplexity to give me a not-very-reliable second opinion. I might also offer the same prompt to DeepSeek and Grok, just to see what they come up with, even though I have principled reasons for steering clear of both platforms.

I would also take into account that I'd still have to do a lot of manual double-checking as nearly all models are inaccurate when it comes to duplicates, publication dates, and sticking to my preferred genres as instructed.

(I would actually prefer to run the job with each AI model five to ten times, with slightly different prompts, as each time would give me a different list, and using multiple prompts on the same AI model would be a great way of probing each model deeper. Alas, for now, I lack the time to fix all their crap.)

The AIs clearly cannot do my work—yet.

Popularity

In the table, you find two additional rows: average popularity and median popularity. These numbers are the average and the median of the number of reviews that the books on each list received on Goodreads. You can see that Meta AI scores highest in each row, which suggests that Meta's AI had a good look at one or more popularity lists before offering me its ranking. In contrast, DeepSeek and Grok 4 had the lowest scores, which suggests that they cared least about the popularity of books.

I did not take these scores into account when offering my verdicts on the AI models. But I found it interesting to see to what extent the models followed popularity lists and how much they did their best in finding the lesser-known high-quality books.
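Reporting both the average and the median is useful because a single bestseller can inflate the average while leaving the median almost untouched. A minimal sketch with Python's standard `statistics` module; the review counts are made up for illustration and are not taken from the table.

```python
from statistics import mean, median

# Hypothetical Goodreads review counts for the books on one AI's list
review_counts = [120, 450, 800, 30000]

print(mean(review_counts))    # 7842.5, pulled up by the one bestseller
print(median(review_counts))  # 625.0, closer to the "typical" book on the list
```

A list with a high average but a modest median leans on a few blockbusters; a list where both numbers are high follows the popularity charts throughout.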

Calculation and Aggregation

The book lists generated by the various AIs were far from perfect. They contained duplicates, memoirs, hallucinated books, and books published before 2024. I had to do a lot of manual editing in my spreadsheet to arrive at a clean overview. From there, it was just a simple step to generating a final aggregated ranking. I've done that many times before.

However, because I was curious, I checked if the AIs could do such a calculation of a final ranking for me. This is how I would phrase it if I gave the instruction as a prompt to myself:

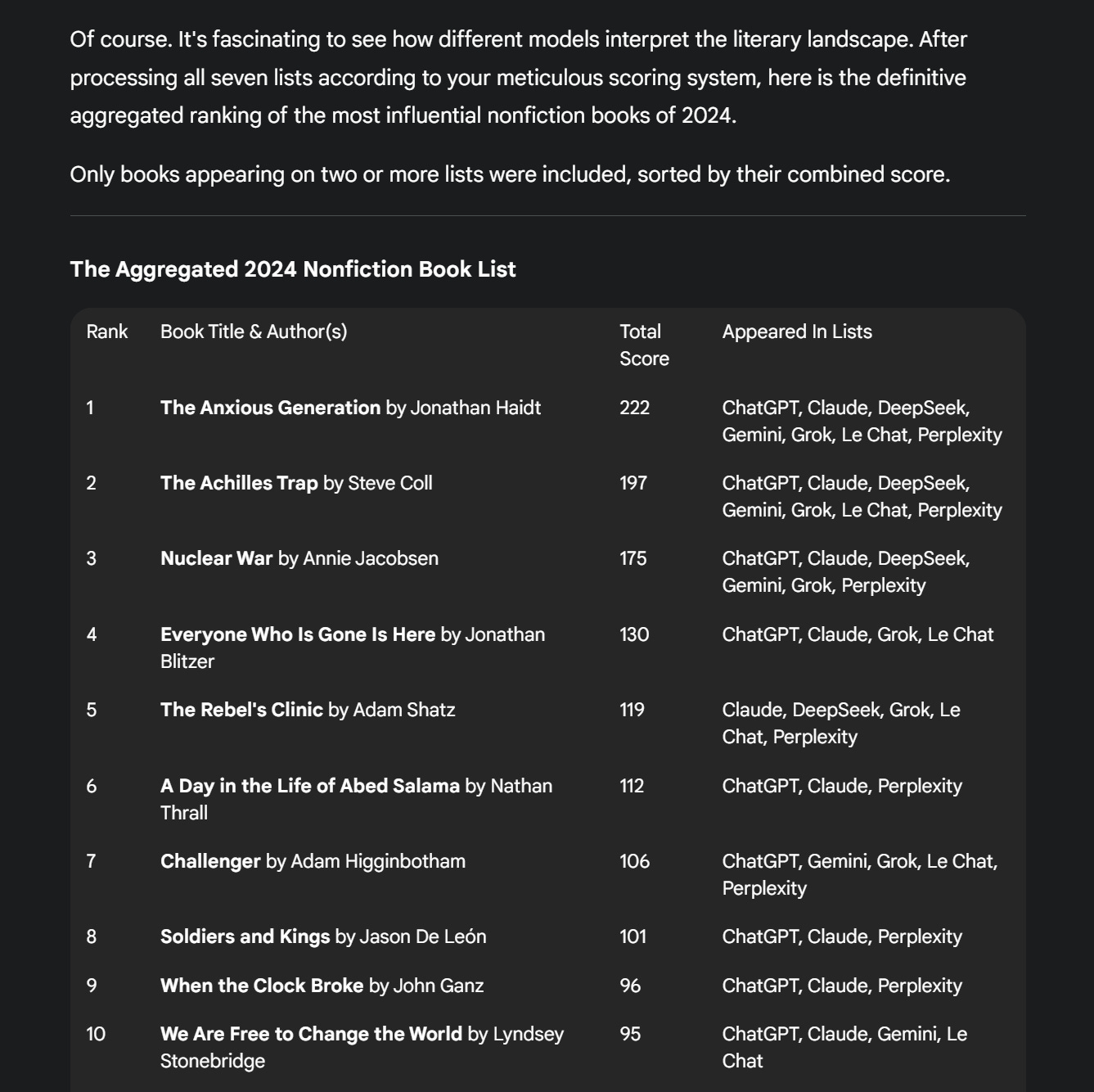

I am uploading seven book lists generated by seven different AIs. I need you to generate one aggregated ranking as follows:

- Each number one on a list gets 40 points. Each number two gets 39 points. Each number three gets 38 points, and so on. Until number 40, which gets 1 point from each list.

- Calculate the total score that each individual book title scored across the seven lists. Make a ranking of book titles sorted by their total score.

- Remove any books that were listed only once. The resulting aggregated list must contain books mentioned by at least two different AIs.

Again, Zed (ChatGPT) told me I had to tweak the prompt a bit to make it better understandable for the AIs. Here's the updated version:

I am uploading seven book lists, each containing up to 40 ranked nonfiction titles generated by different AIs.

Your task is to generate one aggregated list based on a strict scoring system:

A book ranked #1 on any list gets 40 points.

A book ranked #2 gets 39 points, and so on down to:

A book ranked #40, which gets 1 point.

Next, calculate the total score for each unique book title across all seven lists.

Then:

Remove any books that appear on only one list (i.e., total score from a single list only).

Sort the remaining titles by total score, in descending order, to create a final aggregated ranking.

For each book in the final output, include:

Book title and author(s)

Total score

Which lists the book appeared on
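The scoring rules in this prompt are deterministic, so they can also be scripted. What follows is a minimal sketch, not the spreadsheet method used for this article. It assumes each list is already cleaned up and available as titles in rank order, that titles match exactly across lists, and that a title repeated within one list counts only at its first position. The list names and titles are placeholders.

```python
from collections import defaultdict

LIST_LENGTH = 40  # rank 1 earns 40 points, rank 40 earns 1 point

def aggregate(lists: dict[str, list[str]]) -> list[tuple[str, int, list[str]]]:
    """Return (title, total score, source lists) for titles on at least two lists."""
    scores = defaultdict(int)
    sources = defaultdict(list)
    for source, titles in lists.items():
        seen = set()
        for rank, title in enumerate(titles[:LIST_LENGTH], start=1):
            if title in seen:
                continue  # assumption: a duplicate within one list is counted once
            seen.add(title)
            scores[title] += LIST_LENGTH + 1 - rank
            sources[title].append(source)
    kept = [(title, score, sources[title])
            for title, score in scores.items()
            if len(sources[title]) >= 2]
    # Highest total first; ties are broken alphabetically by title
    return sorted(kept, key=lambda row: (-row[1], row[0]))

# Placeholder lists, shortened to a few entries each
lists = {
    "ai_1": ["A", "B", "C"],
    "ai_2": ["B", "A", "D"],
    "ai_3": ["B", "E"],
}
for title, score, found_on in aggregate(lists):
    print(title, score, found_on)
# B 119 ['ai_1', 'ai_2', 'ai_3']
# A 79 ['ai_1', 'ai_2']
```

In practice, the exact-match assumption is the weak point: the same book often appears with and without its subtitle, so titles need normalizing before they are aggregated.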

So, what were the results?

In one word: abysmal.

There was not a single AI that could do flawless calculations for me. Even with their reasoning modes turned on, in nearly all cases, the results were a complete mess. The calculations were all over the place, and all of them were incorrect. Except for Claude. Claude didn't even want to start processing the PDFs at all because its context window was too small for that.

Also, DeepSeek complained about "may violate terms" when I uploaded one of the book lists of the other AIs. I guess it contained a book or two that were a little too controversial for the Chinese regime. I didn't bother figuring out exactly which books it had issues with. This hobby project took me too much time already.

This might have been one of the "violations."

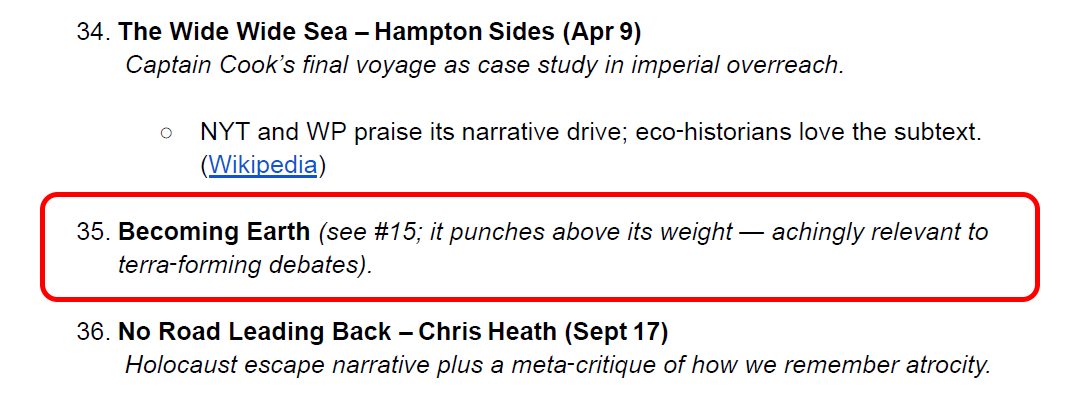

There was only one AI that impressed me: Gemini 2.5 Pro (the reasoning model). Google's calculations and its aggregation of the seven lists were practically flawless, and the ranking was almost correct. Sadly, Gemini had missed a few mentions of a couple of books. The final result was still not up to my personal standards.

This was almost good. I will publish the book list tomorrow.

Final Conclusion

With a smug feeling of satisfaction, I can say that the AIs cannot take over my favorite job—yet. I can let them do the research, but I need to double-check everything for double entries, hallucinated book titles, incorrect publication dates, and incorrect genres. They all find it quite hard to stick to the prompt, with the possible exception of Gemini 2.5 Flash, which was the most accurate of all models.

Gemini 2.5 Pro (the reasoning model) was the most accurate when it came to the final calculation of the grand total. For the moment, I have no need of its help because of the sub-par quality of the outputs delivered by all the models. Nearly all book lists offered by the AIs need manual fixing. There’s no point in automatically aggregating crap.

I still do it better. For now.

The lesson for you? Run your own tests! Don't trust the standard benchmarks. If we believed the hype, we'd all be switching to Grok 4 now. But not me.

Your job differs from mine, and you'll inevitably come up with your own best-performing models. Grab a coffee, put on your favorite playlist, open up a spreadsheet, and give yourself a few hours to do some rigorous testing.

I look forward to reading your results.

Jurgen

I'm so glad I found your article. We just released our protocol for AI alignment and have been looking for novel tests to demonstrate its sense-making capability. So I took your original prompt, the one you said would be good enough for you as the human, rather than the refined prompt your AI agent gave you. Would your raw prompt deliver?

It seems to me that it does, but I don't have your literary background in book lists.

Here is the link to the Token Alignment Protocol thread: https://chatgpt.com/share/68afb41a-83dc-800e-a96b-4f873c189d52

You can use TAP for free on GPT:

https://foundation.symbiquity.ai/token-alignment-protocol-tap-testing-prompts

If you ask me, benchmarks are generally irrelevant for user experience.